Using the table Command

What is the table Command? Splunk’s table command is essential for formatting results on dashboards and in searches. The table command enables Splunk users to…


While Splunk’s rename command is simple overall, it is essential for making dashboards and reports easy for your users to…

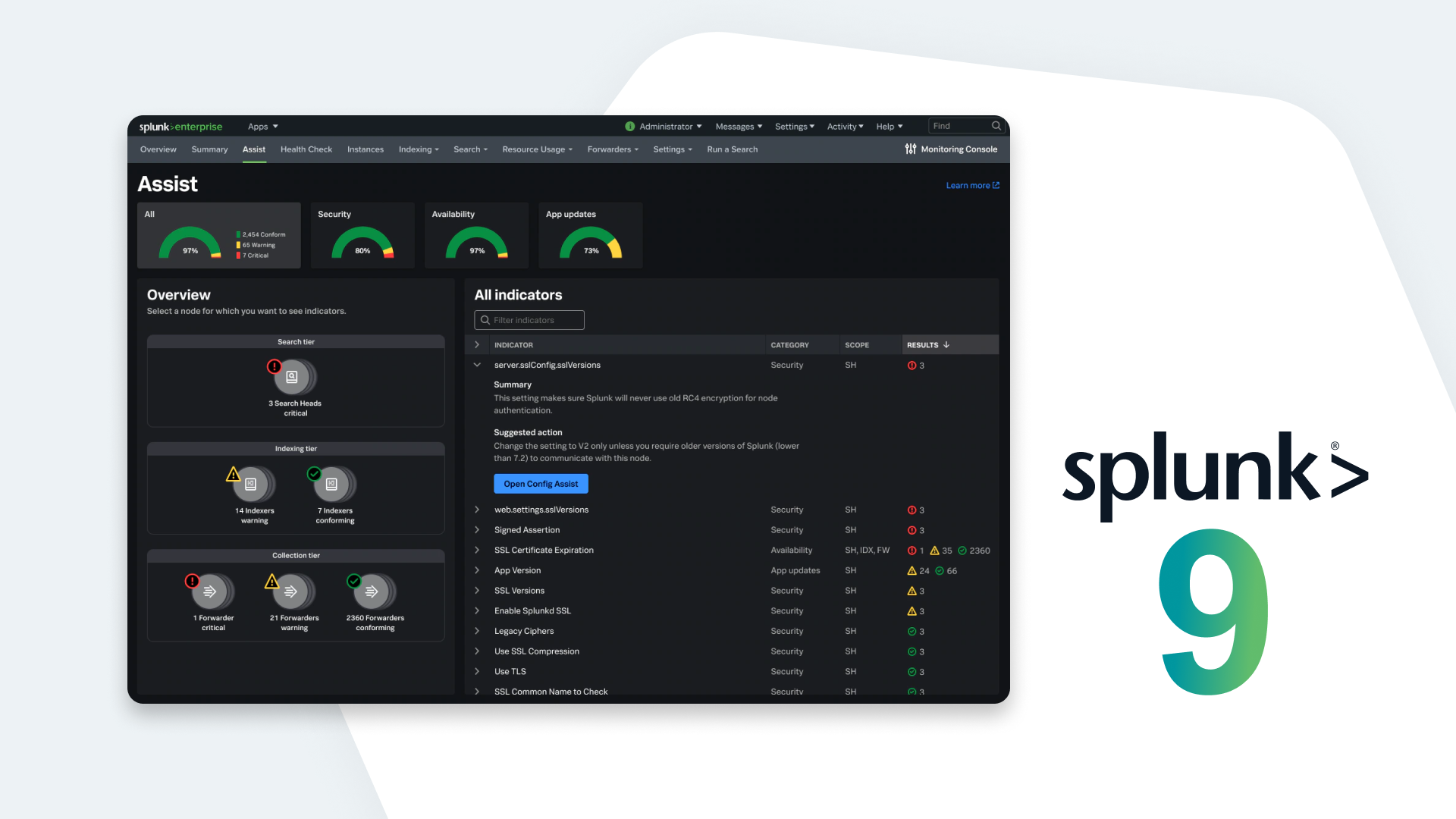

The wait is over, and Splunk 9 is officially here! This release introduces a number of features and improvements aimed at making life easier for…

Cluster Bundles are packages of knowledge objects that must be shared between indexers and search heads in clustered environments. Unfortunately, these can get too big…